Nvidia Near-Eye Light Field Display: Background, Design and History [Video]

About a year ago, Nvidia presented a novel head-mounted display that is based on light field technology and gives the human eye both depth cues and the ability to refocus. Their so-called Near-Eye Light Field Display was more of a proof of concept, but it’s an exciting new technology that solves a number of existing problems with stereoscopic virtual reality glasses.

Nvidia researcher Douglas Lanman recently gave a talk at Augmented World Expo (AWE2014), in which he explained the background and evolution of head-mounted displays and the history and design of Nvidia’s near-eye light field display prototypes:

In the second half of his talk, Lanman describes the two Nvidia prototypes more closely:

He shows a film-based prototype built with light valve technology (LVT), in which a piece of laser-exposed film mounted on an Android tablet simulates a 4×4 cm 8K (ultra-high-definition) display. Actual 8K displays are expected to become available within 3 to 5 years.

The other prototype is the binocular OLED-based prototype that we saw at SIGGRAPH 2013. It was created from a refurbished Sony HMZ-T1 3D headset comprising two 1-inch 720p OLED panels. The device was disassembled, fitted with a microlens array, and repackaged in a custom 3D-printed enclosure. While one display in the original Sony optical setup weighs about 65 g and contains 4 internal lenses, the modified version, which uses a microlens array instead, weighs only 0.7 g and is much cheaper to build.

In comparison with existing displays, near-eye light field displays can be made extremely thin and lightweight. They also enable a much wider field of view, resulting in a more immersive user experience. Another important advantage is comfort: the glasses can correct for near- and far-sighted vision by adjusting software parameters, and they don’t create an accommodation-convergence conflict. In conventional stereoscopic glasses, an object can seem farther away than the display because of stereoscopic depth, so the eyes try to focus behind the actual display and the image becomes blurry, which can cause nausea.

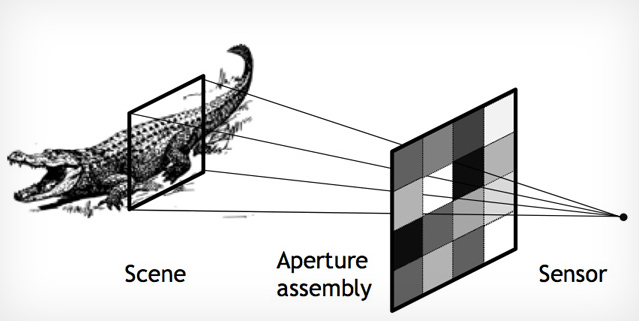

However, there are also limitations at this point: the use of microlens arrays reduces spatial resolution by a factor of 5 or 6 in the current prototype (for comparison, Lytro cameras have a factor of about 10), but this problem will be solved as the resolution of OLED panels increases. The glasses also require larger microlens arrays, and need user calibration to provide an optimal view to the eyes.

Today’s technology, as demonstrated with the second prototype, allows for spatial resolutions of about 150 to 200 pixels across and a field of view (FOV) of 40 degrees. However, Lanman notes that high-resolution glasses with up to 80 degrees FOV should become a reality within three to five years.

Putting the hardware aside for a moment, rendering images for light field displays can also be resource-intensive. However, Lanman and colleagues showed that “almost any game engine” can be modified to support stereoscopic light field rendering (via ray tracing) on a standard PC, with just 50 lines of code.
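To give a rough idea of what ray-traced rendering for a microlens display involves, here is a minimal 1D sketch. It is not Nvidia's code: it assumes a simple pinhole model in which every display pixel emits one ray through the centre of the lenslet in front of it, and the function name `lenslet_rays` and all dimensions are hypothetical values chosen for illustration. A renderer would trace each of these rays into the scene and write the resulting colour into the corresponding pixel.

```python
import math

def lenslet_rays(lens_pitch_mm, gap_mm, num_lenses, pixels_per_lens):
    """Return one (origin_x_mm, angle_deg) ray per display pixel (1D cross-section).

    Assumption: pinhole approximation. Each pixel sits gap_mm behind the
    lenslet array and its ray passes through the centre of the lenslet
    that covers it. All parameter values are illustrative.
    """
    pixel_pitch_mm = lens_pitch_mm / pixels_per_lens
    rays = []
    for lens in range(num_lenses):
        lens_center = (lens + 0.5) * lens_pitch_mm
        for p in range(pixels_per_lens):
            # Centre of pixel p underneath this lenslet.
            pixel_x = lens * lens_pitch_mm + (p + 0.5) * pixel_pitch_mm
            # Direction from the pixel through the lenslet centre.
            angle = math.degrees(math.atan2(lens_center - pixel_x, gap_mm))
            rays.append((lens_center, angle))
    return rays

# Hypothetical geometry: 1 mm lenslets, 3 mm gap, 10 pixels under each lenslet.
rays = lenslet_rays(lens_pitch_mm=1.0, gap_mm=3.0, num_lenses=4, pixels_per_lens=10)
for origin, angle in rays[:3]:
    print(f"ray through x={origin:.2f} mm at {angle:+.2f} degrees")
```

The sketch also hints at the resolution trade-off: the pixels under one lenslet encode different ray directions rather than different positions, so the more pixels each lenslet covers, the fewer distinct spatial samples remain.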

When asked about Augmented Reality, Lanman teased a new “pinlight display AR” technique which was apparently live-demoed at SIGGRAPH 2014, and should be published soon.

This is very interesting. What ever came of this tech?

Where can we get these microlens arrays for experimenting? I have found them in some places, but they are very expensive.