A New Class of Light Field Sensor: Researchers Unlock Light Field Features in Optical Fiber Bundles Used for Endoscopy

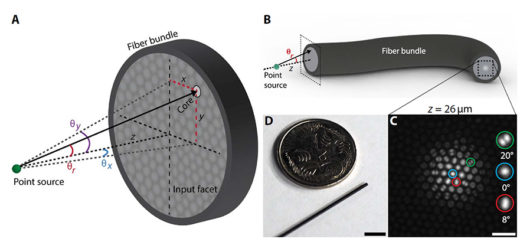

Optical fiber bundles are a common tool for looking into hard-to-reach areas of the human body, and (micro-)endoscopy enables efficient, minimally invasive medical diagnoses. An optical bundle consisting of 30,000 fibers theoretically captures...
