The Wonder Camera: Canon’s Concept Camera of the Future

At World Expo 2010, the Japanese pavilion held something special for all the photography and gadget geeks out there: Canon demonstrated its vision of what cameras will be able to do 20 years from now, and called it the “Wonder Camera Concept”.

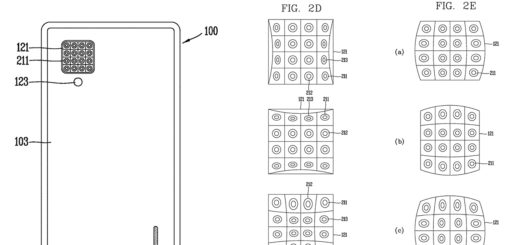

The camera was demonstrated live in front of a giant 12,288 x 2,160 pixel Panasonic display. It showed the next big steps in just about every aspect of a camera: higher image resolution, super-high zoom, better image stabilization, more intelligent algorithms, and lots and lots of processing power.

With the Wonder Camera, Canon basically got rid of still imaging per se, and instead created a camera that records video at such high quality that users will be able to pick the best single frame at any time and use it as a still photo. (Of course, we’re quite aware that it would take some time to sift through all the data after, say, a safari…)

A zoom lens estimated at around 3,000 to 5,000 mm focal length was operated by hand on stage, yet the image stabilization technology behind it produced a very stable image. Zooming into pictures and videos after the fact was also shown to be possible, several orders of magnitude beyond anything available today (07:15 in the video).

Adjusting focus will also be a thing of the past, according to the world’s biggest camera manufacturer: “Canon claims to have a proprietary technology that enables everything in every frame to be in focus at all times.” (Light field technology, anyone?)

As you can see in the video, the technology already seems to work. That is pretty impressive, especially since this is only a concept camera.

In some scenes, however, you can see that the woman handling the camera is also wearing a laptop-sized backpack with cables leading to the camera. While the processing power is obviously already available today, it doesn’t quite fit into a camera yet.

This is a poor, unimaginative view of the future of photography. Faster, more stable, sharper, better, better, better, etc. Merely advancing existing technologies is boring and short-sighted, particularly when you look back at the progress of the last 20 years.

In 20 years’ time, every display surface will have a wide-area, integrated, stacked, dynamic coded-aperture surface buried beneath it, using no moving parts and no lens. These surfaces will capture the entire light field, allowing reproduction of depth as well as space within a perspective range. The ‘aperture’ field will be dynamically reconfigurable to act as a wide-field camera, telescope, 1:1 isometric device, microscope, or simultaneous combinations of these for different regions. Very close occluding objects will be both captured and removable from the image space behind them. Spatial resolution will be dynamically allocatable. Compressed sensing will be directly integrated with feedback-loop control of resolution allocation. Foveon-type doped solid-state detectors with femtosecond-laser-etched nanostructures will capture a continuous spectrum across the image field, extending high into the UV and low into the infrared, and working at ultralow light levels. The captured light will be harvested to energise the device.

The entire dataset will be continuously compressed with wavelet schemes extending into the angular, temporal and spectral domains, and streamed continuously over a spatio/rotational polarisation-multiplexed, mesh-networked 5G connection to limitless cloud storage. The image plane will be reconstituted on the fly from any perspective array and fed back to the display, which will itself provide an eye-tracked, plenoptic-controlled, holographic-like reconstruction at higher resolution than a human can see. It will use Mirasol microinterferometer pixels to reroute ambient light, so it stays visible at high intensities in direct light without active power. In the cloud, the image space will be integrated with all other capture devices in the vicinity, providing a continuously reconstructable view of the world from any perspective at all times, plus time-machine functions that reconstruct viewspaces from the past, since they will already have been captured.

With new data algorithms, the cloud will be able to isolate, identify and visually reproduce any object ever. That’s where we’re headed. All these technologies already exist separately, and some are already in military and/or industrial use. I fail to see how they couldn’t make it into every phone in the next 20 years, except for, you know, the fact that phones won’t exactly exist.

Wow, Elmer… sounds like you know a lot about optics. Good post with interesting insights!

Peter