MIT Boosts Efficiency of Lensless Single-Pixel Cameras by a Factor of 50

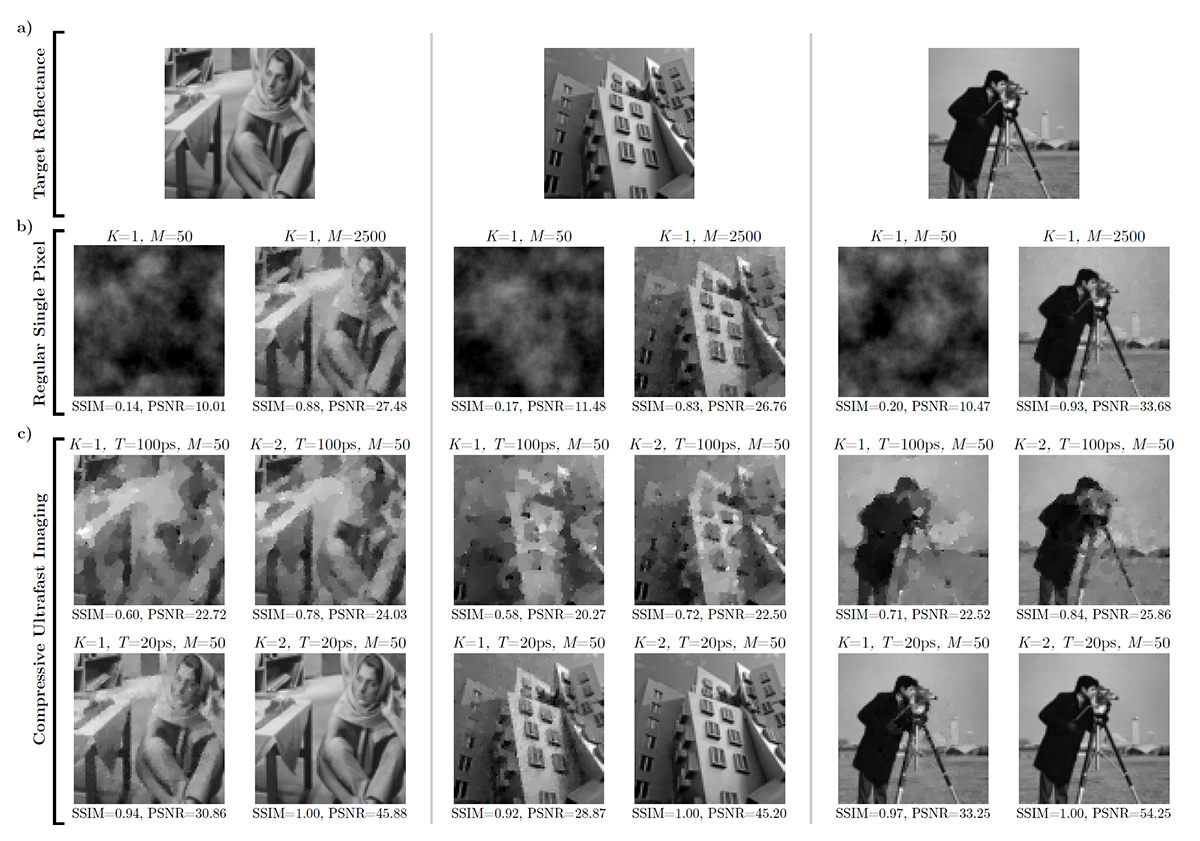

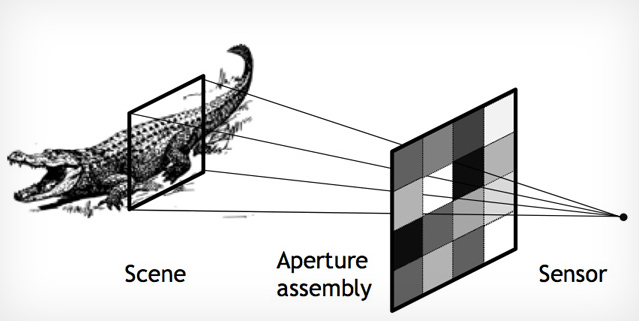

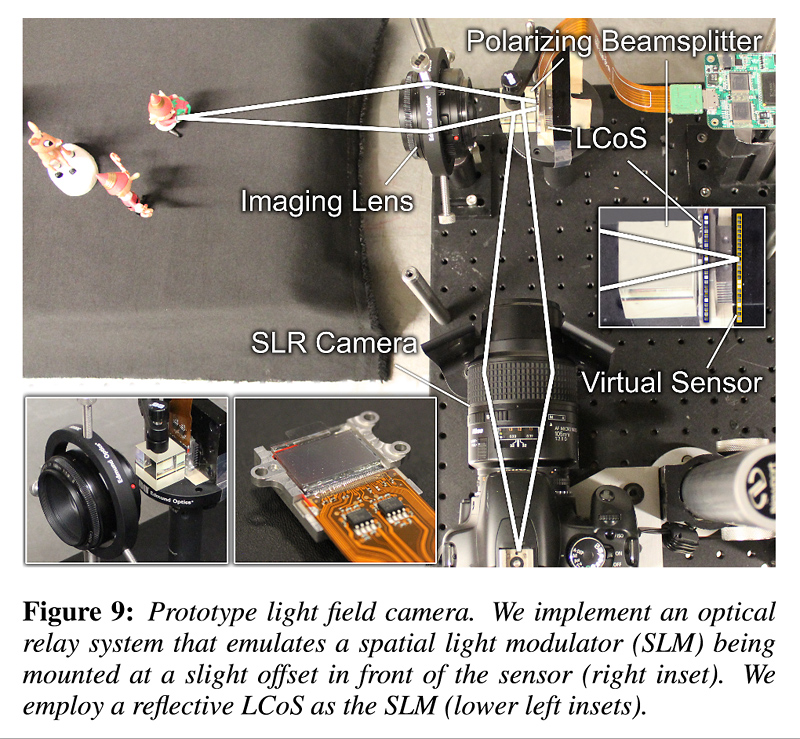

Today’s conventional cameras require a set of highly precise lenses and a large array of individual light sensors. This general blueprint limits the use of cameras in new applications, e.g. in ever-thinner smartphones, or...
