Nvidia Near-Eye Light Field Display: Spherical Waves Improve Holographic Rendering

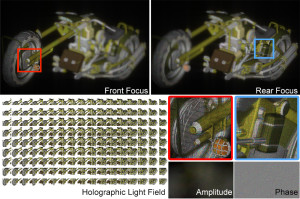

Back in 2013, Nvidia presented a near-eye light field display that, for the first time, allowed a virtual reality experience in which the user could actively focus on objects at different depths. This technique enables a more lifelike experience than today’s VR headsets, where everything is in focus. As with any first-generation prototype, it had significant limitations, including a strong trade-off between spatial and angular resolution as well as visual artefacts.

At last year’s SIGGRAPH conference on computer graphics, researchers around David Luebke at Nvidia, together with MIT CSAIL, introduced a new rendering technique that avoids many of these limitations by rendering the output with spherical waves and co-modulating both phase and amplitude. This leads to better and more efficient rendering of holograms.

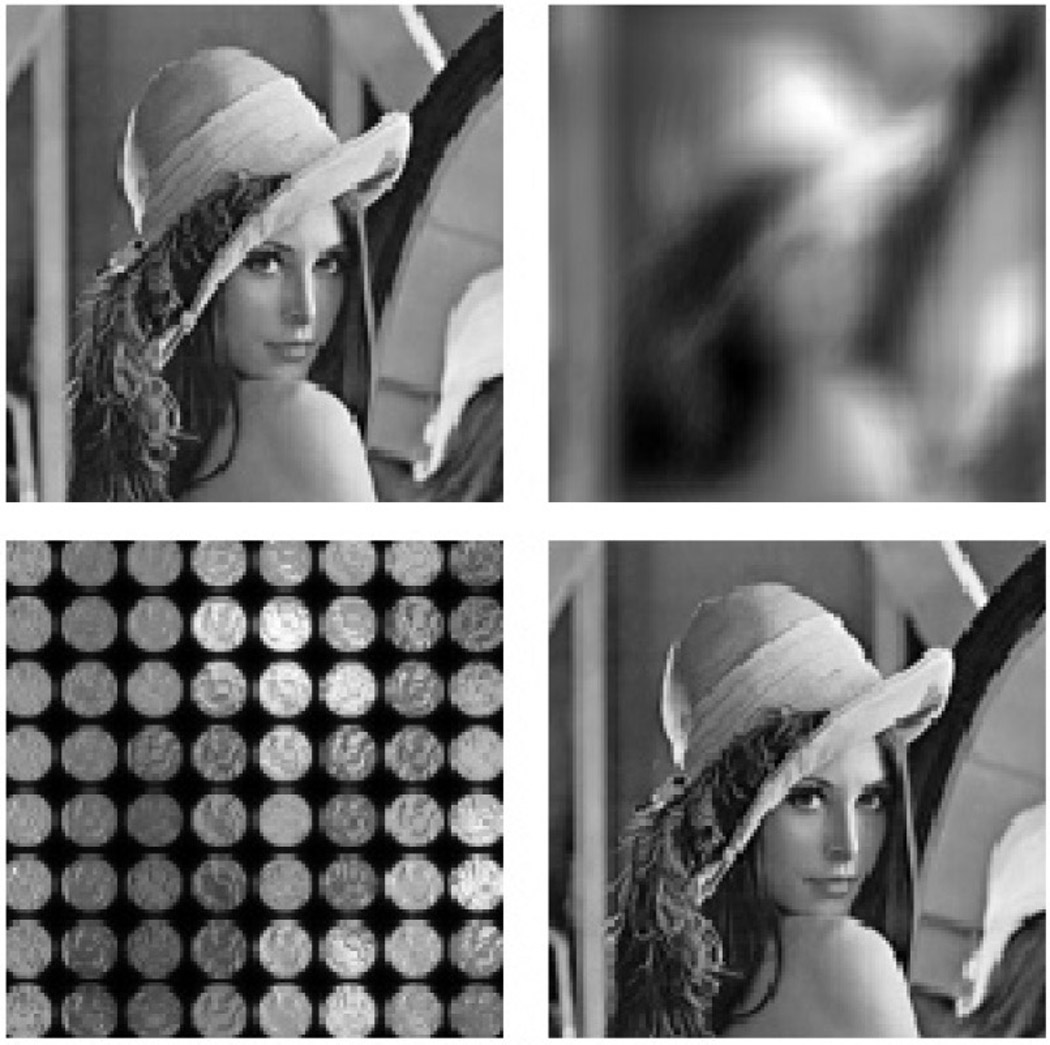

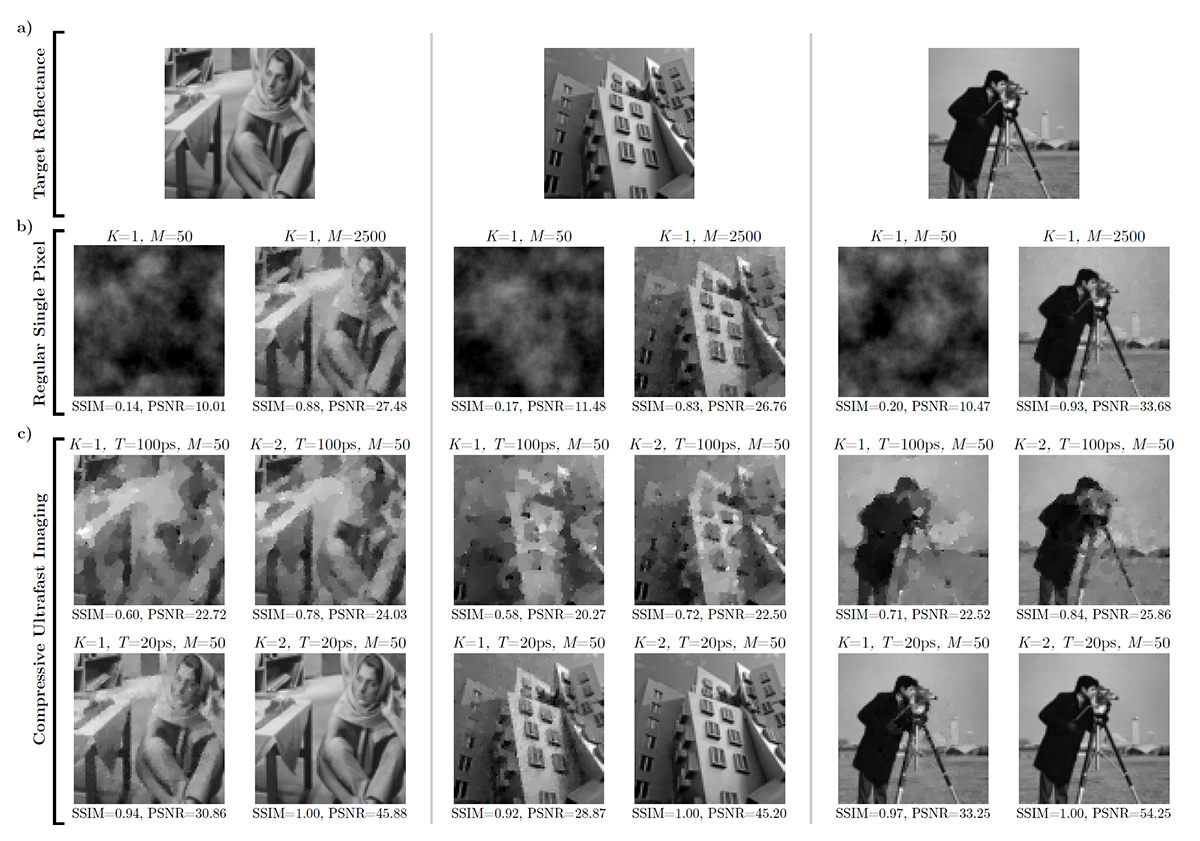

Holograms have high resolution and great depth of field, allowing the eye to view a scene much like seeing through a virtual window. Unfortunately, computer-generated holography (CGH) does not deliver the same promise due to hardware limitations under plane wave illumination and large computational cost. Light field displays have been popular due to their capability to provide continuous focus cues. However, light field displays suffer from trade-offs between spatial and angular resolution, and do not model diffraction. We present a light-field-based CGH rendering pipeline allowing for reproduction of high-definition 3D scenes with continuous depth and support of intra-pupil view-dependent occlusion. Our rendering accurately accounts for diffraction and supports various types of reference illumination for holograms. We prevent the under- and over-sampling and geometric clipping suffered in previous work. We also implement point-based methods with Fresnel integration that are orders of magnitude faster than the state of the art, achieving interactive volumetric 3D graphics. To verify our computational results, we build a see-through near-eye color display prototype with CGH that enables co-modulation of both amplitude and phase. We show that our rendering accurately models the spherical illumination introduced by the eyepiece and produces the desired 3D imagery at the designated depth. We also derive aliasing, theoretical resolution limits, depth of field, and other aspects of the design trade-off space for near-eye CGH.
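To give a rough feel for what a point-based hologram with spherical waves involves, here is a minimal sketch in plain Python. It is not the pipeline from the paper: it is a brute-force summation in which every scene point emits a spherical wave and the contributions are added up on a flat hologram plane, with no occlusion handling and none of the Fresnel-integration speed-ups described above. The function names, the pixel pitch, the wavelength and the test point are all assumptions chosen for illustration.

```python
import cmath
import math


def spherical_wave_hologram(points, n, pitch, wavelength):
    """Sum spherical waves from scene points on an n x n plane at z = 0.

    points: iterable of (x, y, z, amplitude) tuples, in metres, with z > 0
            being the distance of the point from the hologram plane.
    Returns a row-major list of lists of complex field values.
    Illustrative brute-force version; cost is O(n * n * len(points)).
    """
    k = 2.0 * math.pi / wavelength      # wavenumber
    half = (n - 1) / 2.0                # centre the pixel grid on the axis
    field = [[0j] * n for _ in range(n)]
    for row in range(n):
        y = (row - half) * pitch
        for col in range(n):
            x = (col - half) * pitch
            total = 0j
            for px, py, pz, amp in points:
                # Distance from the scene point to this hologram pixel.
                r = math.sqrt((x - px) ** 2 + (y - py) ** 2 + pz ** 2)
                # Spherical wave: amplitude falls off as 1/r, phase is k*r.
                total += (amp / r) * cmath.exp(1j * k * r)
            field[row][col] = total
    return field


def amplitude_and_phase(field):
    """Split the complex field into the two maps a display would modulate."""
    amplitude = [[abs(v) for v in row] for row in field]
    phase = [[cmath.phase(v) for v in row] for row in field]
    return amplitude, phase


if __name__ == "__main__":
    # Assumed values: one green point 10 cm behind a 5 x 5 patch of 8 um pixels.
    scene = [(0.0, 0.0, 0.1, 1.0)]
    field = spherical_wave_hologram(scene, n=5, pitch=8e-6, wavelength=532e-9)
    amplitude, phase = amplitude_and_phase(field)
    print(amplitude[2][2], phase[2][2])
```

The two maps returned by `amplitude_and_phase` correspond to the quantities that a display co-modulating amplitude and phase would have to reproduce. For the single on-axis point in the usage example, the centre pixel's amplitude is simply the point amplitude divided by its distance, so it comes out close to 10.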
