What is the Light Field?

The light field is the totality of light rays in 3D space: the light flowing through every point, in every direction.

Everything we can see is illuminated by light coming from a light source (e.g. the sun or a lamp), travelling through space and hitting surfaces. At each surface, light is partly absorbed, and partly reflected (or sometimes refracted) to another surface, where it bounces again, until it finally reaches our eyes.

What exactly we see depends on our precise position in the light field. By moving around in it, we perceive different parts of the light field and use them to get an idea of the relative positions of objects in our environment.

Scientifically speaking, light rays are described by the 5-dimensional plenoptic function: each ray is defined by three coordinates for its position in 3D space (3 dimensions) and two angles that specify its direction (2 dimensions).

However, when measuring a light field using cameras, light rays can be assumed to have constant radiance along their path, as long as they travel through free space without being blocked. One of the five dimensions is therefore redundant, and removing it from the 5D function leaves us with the 4D light field.
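As a rough notation sketch of this reduction (the symbols and the two-plane indexing are a common convention, not something defined in this article):

```latex
% 5D plenoptic function: radiance at position p = (x, y, z)
% in the direction given by the angles (\theta, \phi)
L_{5\mathrm{D}} = L(x, y, z, \theta, \phi)

% Constant radiance along a ray in free space:
% moving a distance \lambda along the ray direction d(\theta, \phi)
% does not change the value
L\bigl(\mathbf{p} + \lambda\,\mathbf{d}(\theta, \phi),\ \theta,\ \phi\bigr)
  = L(\mathbf{p},\ \theta,\ \phi), \qquad \mathbf{p} = (x, y, z)

% A ray can then be indexed by where it crosses two parallel planes,
% at (u, v) on the first and (s, t) on the second
L_{4\mathrm{D}} = L(u, v, s, t)
```

Other 4D parameterisations are possible. The two-plane form is used here only because it maps naturally onto a camera array or a microlens sensor.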

Check out Wikipedia’s Light Field article for a more detailed description of the light field and the plenoptic function.

How to Record a Light Field

A traditional camera captures only a flat, two-dimensional representation of the light rays reaching the lens at a given position. The 2D image sensor records the sum of brightness and colour of all light rays arriving at each individual pixel.

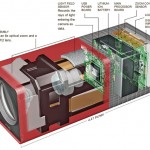

In contrast, a light field camera can record not only brightness and colour values in the 2D imaging sensor, but also the direction/angle of all the light rays arriving at the sensor. This additional information allows us to reconstruct where exactly each light ray came from before reaching the camera, making it possible to calculate a three-dimensional model of the captured scene.

Light field volumes can be captured using several techniques, including…

- a single, robotically controlled camera

- a rotating arc of cameras

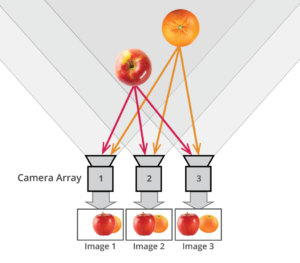

- an array of cameras or camera modules

- a single camera or camera lens fitted with a microlens array

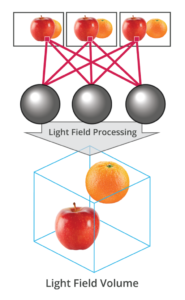

Light Field Processing

Depending on the technique used, the captured image data typically consists of an image containing many sub-images (from a single sensor and microlens array) or many images (from multiple cameras or exposures). The common feature of both datasets is that the sub-images or individual images differ slightly from each other because they captured light rays from slightly different positions in space.
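For the microlens case, the rearrangement from one image with many sub-images into a stack of slightly different views can be sketched as follows. This is a minimal illustration under strong assumptions: a greyscale image, microlenses perfectly aligned to the pixel grid, and a rectangular block of `n_u` x `n_v` pixels under each microlens. The function name and parameters are hypothetical, and real cameras need calibration and resampling first.

```python
import numpy as np


def lenslet_to_views(raw, n_u, n_v):
    """Rearrange an idealised lenslet image into a 4D array of views.

    raw: 2D array of shape (S * n_u, T * n_v), where each n_u x n_v block
         of pixels is assumed to sit under exactly one microlens.
    Returns an array of shape (n_u, n_v, S, T): views[u, v] is the image
    built from pixel (u, v) under every microlens.
    """
    height, width = raw.shape
    if height % n_u or width % n_v:
        raise ValueError("image size must be a multiple of the block size")
    s, t = height // n_u, width // n_v
    # Split rows into (microlens row, pixel row) and columns likewise.
    blocks = raw.reshape(s, n_u, t, n_v)
    # Reorder to (pixel row, pixel col, microlens row, microlens col).
    return blocks.transpose(1, 3, 0, 2)


# Toy example: a 6 x 6 sensor with 3 x 3 pixels under each of 2 x 2 microlenses.
raw = np.arange(6 * 6).reshape(6, 6)
views = lenslet_to_views(raw, n_u=3, n_v=3)
print(views.shape)
```

With multiple cameras or exposures, the individual images already play the role of `views[u, v]`, so no rearrangement of this kind is needed.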

The difference, or disparity, between pixels in the (sub-)images can be used to compute the colour and angular information of the recorded light rays, making it possible to calculate each object’s position in space and its distance from the lens or camera. This information is then processed to compute a light field volume, containing a 3D model of the recorded scene.
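To give a feel for how disparity relates to distance, here is a minimal sketch for the simplest case: two identical, parallel pinhole cameras. That setup, the function name and the parameters are assumptions for illustration, not the processing pipeline of any particular light field camera.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Distance to a point seen by two parallel pinhole cameras.

    disparity_px:    horizontal shift of the point between the two images
    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centres in metres
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    # Similar triangles: depth = focal length * baseline / disparity
    return focal_length_px * baseline_m / disparity_px


near = depth_from_disparity(disparity_px=50.0, focal_length_px=1000.0, baseline_m=0.25)
far = depth_from_disparity(disparity_px=10.0, focal_length_px=1000.0, baseline_m=0.25)
print(near, far)  # 5.0 25.0
```

Nearby objects shift a lot between views and distant objects shift very little, which is why a small baseline (as in a microlens array) limits the range over which depth can be resolved.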

What can you do with Light Field Technology?

Capturing a light field volume, rather than just a 2D image, opens up entirely new possibilities for imaging. The rich light field data allows us to change fundamental features of an image after it is taken: you can choose which parts of the image are in focus or blurry, adjust optical parameters such as the depth of field, change the perspective within the limits of the lens (or camera array), or even create 3D pictures from a single exposure.
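Refocusing after capture is often explained as "shift and add": each view is shifted in proportion to its offset from the central view, then all views are averaged. The sketch below assumes the light field is stored as a greyscale array of shape (U, V, S, T), meaning a U x V grid of views that are each S x T pixels. The array layout, the `slope` parameter and its sign convention are illustrative assumptions, and only whole-pixel shifts are used.

```python
import numpy as np


def refocus(views, slope):
    """Shift-and-add refocusing of a 4D light field.

    views: array of shape (U, V, S, T)
    slope: shift in pixels per view step; each value brings a
           different depth plane into focus (hypothetical parameter)
    """
    n_u, n_v = views.shape[:2]
    centre_u, centre_v = (n_u - 1) / 2, (n_v - 1) / 2
    out = np.zeros(views.shape[2:], dtype=float)
    for u in range(n_u):
        for v in range(n_v):
            dy = int(round(slope * (u - centre_u)))
            dx = int(round(slope * (v - centre_v)))
            # np.roll wraps around at the borders; acceptable for a sketch.
            out += np.roll(views[u, v], shift=(dy, dx), axis=(0, 1))
    return out / (n_u * n_v)


# Synthetic light field: one bright point that moves by 1 pixel per view.
light_field = np.zeros((3, 3, 17, 17))
for u in range(3):
    for v in range(3):
        light_field[u, v, 8 + (u - 1), 8 + (v - 1)] = 1.0

sharp = refocus(light_field, slope=-1)   # shifts cancel the point's motion
blurred = refocus(light_field, slope=0)  # plain average of all views
print(sharp[8, 8], blurred[8, 8])
```

With the matching slope, the point's copies line up and it appears sharp. With any other slope its energy is spread over several pixels, which is the synthetic equivalent of being out of focus.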

Have a look at Light Field Features for more details.
